Tiikk

work in foreign place emergency

Undergraduate student at the German company (HIMT)

Welcome to my blog! Here I'll be posting about all kinds of topics that interest me like biking, hiking, baking and building. And MACHINE LEARNING! There is probably not much here yet, but I promise there will be soon :)

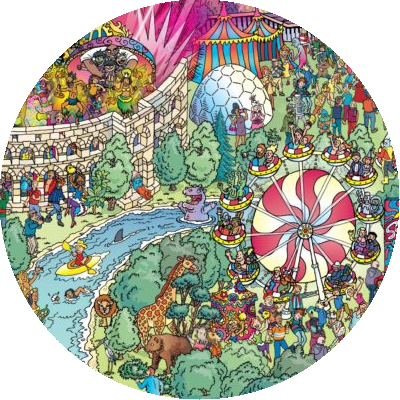

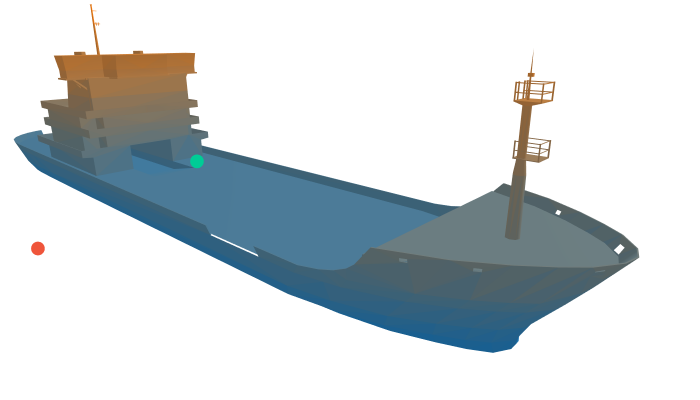

If you work in machine learning, or worse, deep learning, you have probably encountered the problem of too little data at least once. For a classification task you might get away with hand-labeling a couple of thousand images, and even detection might still be within manual reach if you can convince enough friends to help you. And then you also want to do segmentation. Even if possible, hand-labeling is an incredibly boring, menial task. But what if you could automate it by rendering photorealistic synthetic training data with pixel-perfect annotations for all kinds of scene understanding problems?

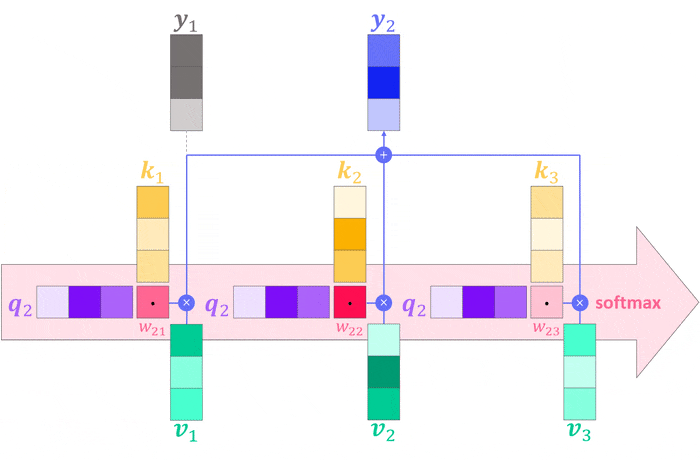

Yet another article on attention? Yes, but this one is annotated, illustrated and animated, and it focuses on attention itself rather than on the architecture that made it famous. After all, attention is all you need, not Transformers.
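If you'd like something concrete before diving in, here is a minimal, dependency-free Python sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, the variant made popular by Transformers. It's an illustration under that assumption, not necessarily the exact formulation the article uses, and the function names are my own.

```python
import math


def softmax(xs):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries: list of n query vectors; keys and values: lists of m vectors each.
    Returns one output vector per query.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs
```

Each output is a weighted average of the values, weighted by how well the query matches each key. If all keys are identical, the weights are uniform and the output is simply the mean of the values.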

A short introduction to the importance of context in machine learning which also serves as an introduction to the upcoming article on attention.

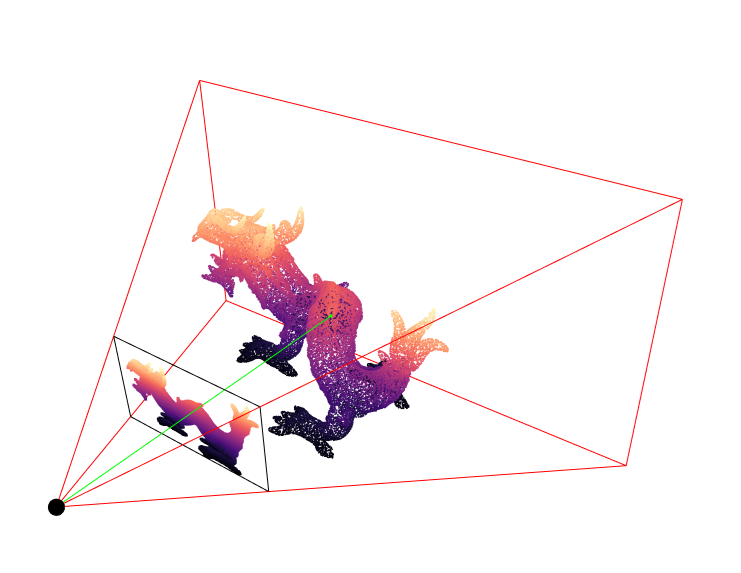

An easy-to-use wrapper around (as well as utility functions and scripts for) some of Open3D's registration functionality.

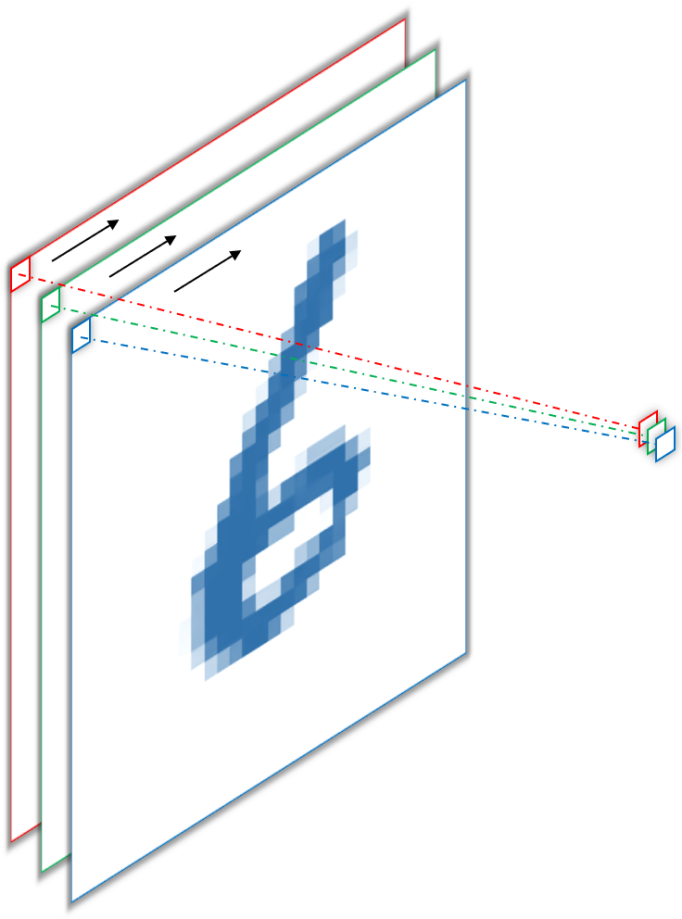

The final post in this four-part series on learning from 3D data. How do we learn from 3D data in this approach? We don't. Instead, we project it into the more familiar 2D space and then proceed with business as usual. Neither exciting nor elegant but embarrassingly simple, effective and efficient.
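As a rough illustration of the "project into 2D" idea, here is a minimal Python sketch that renders a point cloud into a depth image with an ideal pinhole camera. It assumes the points are already in the camera frame (z pointing forward) and that the intrinsics `fx`, `fy`, `cx`, `cy` are known. Whether the article uses depth images, multi-view renderings or another projection is not something this sketch claims.

```python
def render_depth_image(points, fx, fy, cx, cy, width, height):
    """Rasterize camera-frame 3D points (x, y, z) into a depth image (z-buffer).

    Pixels that no point projects onto stay at infinity.
    """
    inf = float("inf")
    depth = [[inf] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera, cannot be projected
        # Pinhole projection to (rounded) pixel coordinates.
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            # Keep only the closest point per pixel.
            depth[v][u] = min(depth[v][u], z)
    return depth
```

The resulting 2D array can be fed to an ordinary CNN; the empty (infinite) pixels would typically be replaced by a fill value first.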

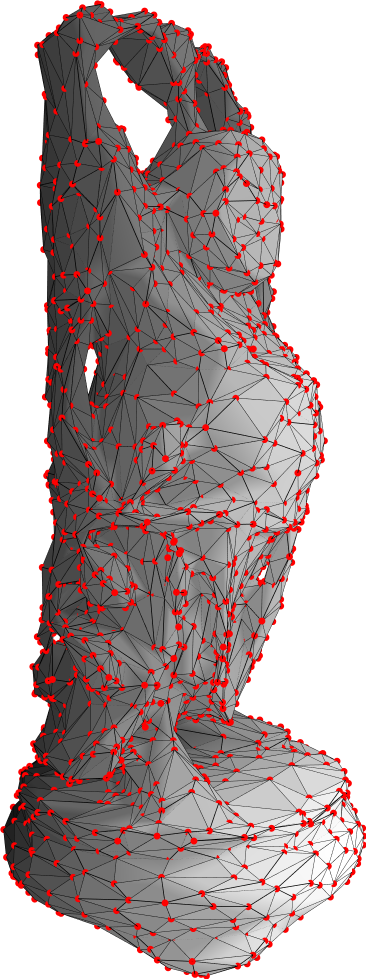

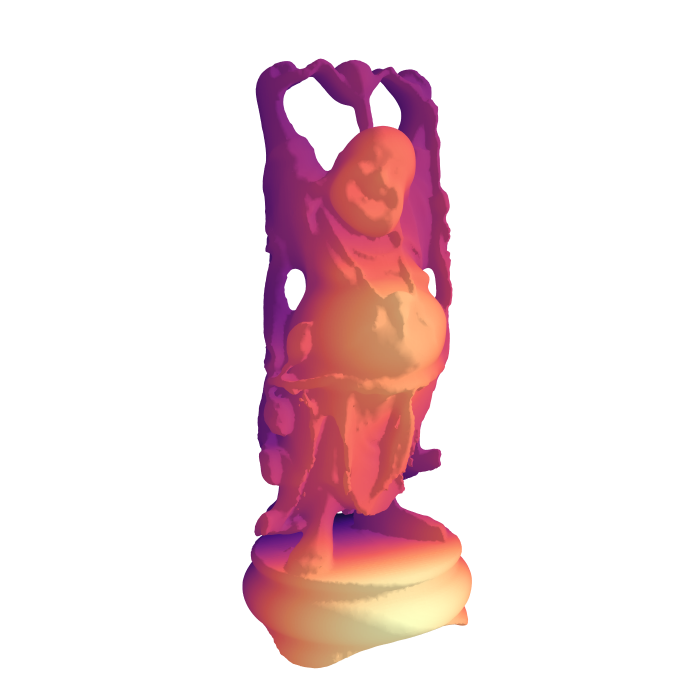

The third way to represent data in 3D. We will learn what a graph is and how it is different from point clouds and voxel grids. Then we will put some butter on the fish (German for "put our money where our mouth is") and look at some implementations of deep learning architectures for graph-structured data and their results.
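One common way to turn a point cloud into a graph is to connect every point to its k nearest neighbours. The sketch below is a minimal brute-force Python version of that idea (quadratic in the number of points, fine for toy examples). It's an assumption for illustration, not necessarily how the graphs in the article are built, and `knn_graph` is a hypothetical name.

```python
import math


def knn_graph(points, k):
    """Build a directed k-nearest-neighbour graph over a point cloud.

    Returns an edge list of (i, j) pairs, meaning point j is one of the k
    closest points to point i (Euclidean distance, no self-loops).
    """
    edges = []
    for i, p in enumerate(points):
        # Sort all other points by distance; ties are broken by index.
        dists = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        edges.extend((i, j) for _, j in dists[:k])
    return edges
```

Together with per-point features, such an edge list is the kind of input graph neural network layers operate on. The kNN relation isn't symmetric, so the graph is directed unless you also add the reverse edges.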

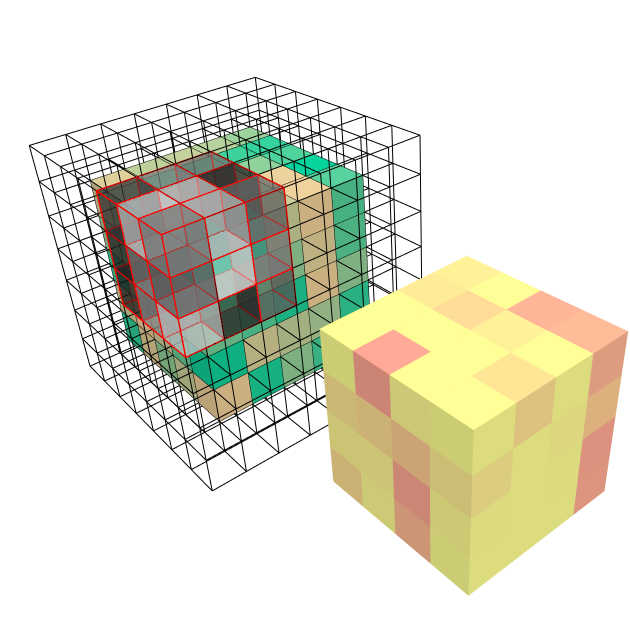

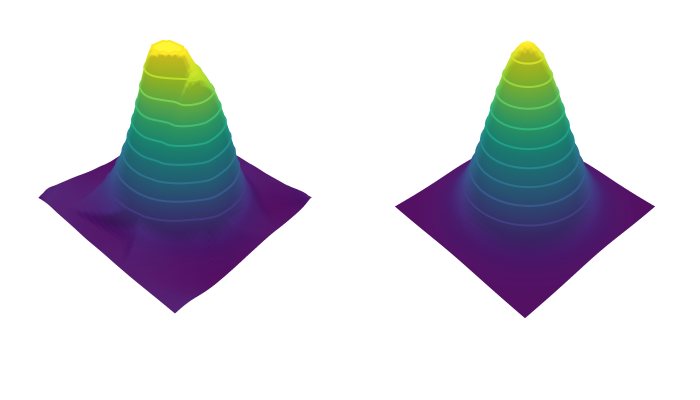

The second post in the series on learning on 3D data. Last time we looked at point clouds; this time we'll be looking at voxel grids. Let's get to it.
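To make the voxel grid idea concrete, here is a minimal Python sketch that bins a point cloud into cubic voxels of a given edge length and counts the points in each. It stores only occupied voxels in a dictionary (a sparse grid); the dense 3D arrays usually fed to 3D CNNs would be built from the same integer indices. The function name and the choice of point counts as voxel values are my assumptions.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size):
    """Map each 3D point to the integer index of the voxel containing it.

    Returns a sparse grid: {(i, j, k): number of points in that voxel}.
    """
    counts = defaultdict(int)
    for p in points:
        # floor() so that negative coordinates land in the correct voxel.
        idx = tuple(math.floor(c / voxel_size) for c in p)
        counts[idx] += 1
    return dict(counts)
```

Replacing the counts with 1 gives a binary occupancy grid. The voxel size trades resolution against memory, which for a dense grid grows cubically with resolution.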

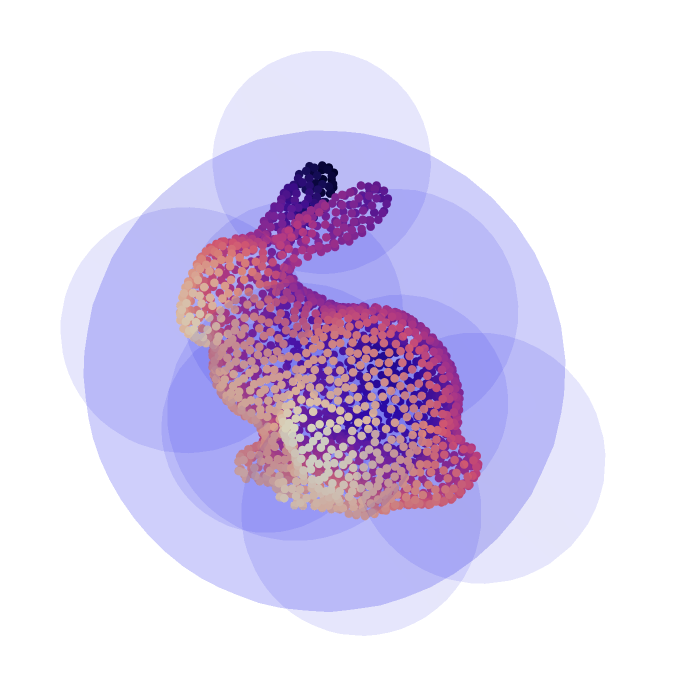

In the previous article, we explored 3D data and various ways to represent it. Now let's look at ways to learn from it to classify objects and perform other standard computer vision tasks, but in three instead of two dimensions! This aspires to become a series devoted to techniques for learning from different types of 3D data, starting with point clouds.

There are many explanations out there trying to convince you of the utility of 1x1 convolutions, both as bottlenecks that reduce computational complexity and as replacements for fully connected layers, but in my opinion they always gloss over some pretty important details. Here is the full picture.
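Both claims can be checked with a few lines of plain Python. The sketch below (my own illustration, with hypothetical function names) applies a 1x1 convolution as the same C_out x C_in weight matrix multiplied with the channel vector at every pixel, and counts parameters to show why a 1x1 bottleneck is cheap compared to a 3x3 convolution. It ignores stride, padding and batching.

```python
def conv1x1(feature_map, weights, bias):
    """1x1 convolution on a feature map of shape [C_in][H][W].

    weights: [C_out][C_in], bias: [C_out]. Every pixel's C_in-dimensional
    feature vector is multiplied by the same weight matrix, i.e. a fully
    connected layer applied independently at each spatial position.
    """
    c_in = len(feature_map)
    h, w = len(feature_map[0]), len(feature_map[0][0])
    return [
        [
            [bias[o] + sum(row[c] * feature_map[c][y][x] for c in range(c_in))
             for x in range(w)]
            for y in range(h)
        ]
        for o, row in enumerate(weights)
    ]


def conv_params(kernel_size, c_in, c_out):
    """Number of weights plus biases of a square convolution layer."""
    return kernel_size * kernel_size * c_in * c_out + c_out


# Reducing 256 channels to 64 with a 1x1 conv vs. a 3x3 conv keeping 256 channels.
print(conv_params(1, 256, 64))   # 16448
print(conv_params(3, 256, 256))  # 590080
```

A 1x1 convolution therefore mixes channels but never looks at neighbouring pixels; the savings come purely from changing the number of channels.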

I've recently started working with the institute's robots, which perceive their environment not only with cameras but also with depth sensors. Working with the 3D data obtained from these sensors is quite different from working with images, and this is a summary of what I've learned so far. How to do deep learning on this data will be covered in the next post.
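A typical first step with depth sensors is turning a depth image into a point cloud. The minimal Python sketch below back-projects every valid pixel with the pinhole camera model. It assumes depth is given in metres, that 0 marks a missing measurement, and that the intrinsics `fx`, `fy`, `cx`, `cy` are known; libraries like Open3D ship optimized versions of this.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image depth[v][u] into camera-frame 3D points.

    Pixels with depth <= 0 are treated as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # missing or invalid depth reading
            # Invert the pinhole projection u = fx * x / z + cx (same for v, y).
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```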

A 101 on ATOMIC HABITS by James Clear. For anyone who ever wanted to get anything done.

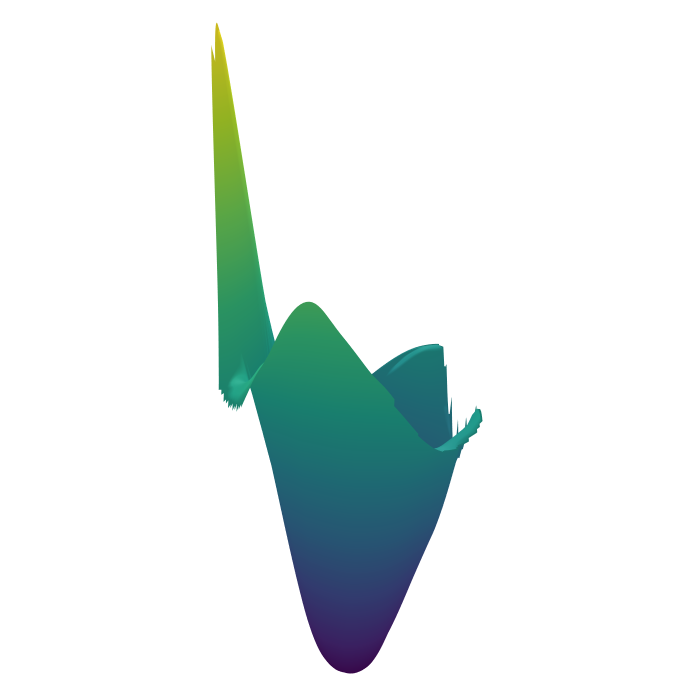

A deep look into Bayesian neural networks from a practical point of view, from theory to application. This is the final part of my informal three-part mini-series on probabilistic machine learning, parts 1 and 2 being "Looking for Lucy" and "A sense of uncertainty".

See what happens when probability theory and deep learning have a little chat. Part 2/3 of my informal mini-series on probabilistic machine learning ("Looking for Lucy" being the first).

My own take on explaining some fundamentals of probability theory, intended as a primer for probabilistic machine learning. In part 1/3 (this article), we have a look at joint, conditional and marginal probabilities, continuous and discrete as well as multivariate distributions, independence and Bayes' Theorem.
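To tie these concepts together, here is a small Python example on a hypothetical joint distribution of two binary variables (the numbers are made up). It computes a marginal by summing out the other variable, a conditional by dividing the joint by a marginal, and applies Bayes' theorem, P(B | A) = P(A | B) P(B) / P(A). `fractions.Fraction` keeps the arithmetic exact; the function names are my own.

```python
from fractions import Fraction

# Hypothetical joint distribution P(Weather, Umbrella); the four entries sum to 1.
joint = {
    ("rain", "umbrella"): Fraction(3, 10),
    ("rain", "no umbrella"): Fraction(1, 10),
    ("dry", "umbrella"): Fraction(2, 10),
    ("dry", "no umbrella"): Fraction(4, 10),
}


def marginal(joint, index, value):
    """Marginalize: sum the joint over all outcomes where variable `index` == value."""
    return sum(p for outcome, p in joint.items() if outcome[index] == value)


def conditional(joint, a, b):
    """P(Weather = a | Umbrella = b) = P(a, b) / P(b)."""
    return joint[(a, b)] / marginal(joint, 1, b)


def posterior_via_bayes(joint, a, b):
    """P(Umbrella = b | Weather = a) via Bayes: P(a | b) P(b) / P(a)."""
    return conditional(joint, a, b) * marginal(joint, 1, b) / marginal(joint, 0, a)


print(marginal(joint, 0, "rain"))                      # 2/5
print(conditional(joint, "rain", "umbrella"))          # 3/5
print(posterior_via_bayes(joint, "rain", "umbrella"))  # 3/4
```

Independence would require P(a, b) = P(a) P(b) for every pair. Here P(rain, umbrella) = 3/10 differs from P(rain) P(umbrella) = 2/5 * 1/2 = 1/5, so the two variables are dependent.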

How does this blog work? What are all these tagged things? Why GitHub pages? This article answers all of these questions and more!

A list of awesome things on the web I've stumbled upon or have been directed to. Those include great (visual) explanations of complicated topics in machine learning and science in general, software tools and other fun stuff.

I'm still exploring what it means to be a researcher. Here I'll take a look at conferences, which play an important role in my field. I'll also provide a timeline of the most important MACHINE/DEEP LEARNING, ROBOTICS, COMPUTER VISION and ARTIFICIAL INTELLIGENCE conferences including paper submission deadlines.

An overview of DEEP WORK, a book by Cal Newport, intended as a reference for quickly looking up how to get lost in your work rather than in distractions. For people in a hurry, there is a 5-minute summary at the end of the article.

Some thoughts on writing useful articles. These are mainly things I saw elsewhere and would like to incorporate into my own writing, but also things I often miss in otherwise great texts.

An overview of how I imagine my workdays, in the hope of remembering it and keeping myself accountable.

An ongoing list of potential PhD topics. I thought it might be helpful to put this list here to motivate me but also to be able to easily share it and potentially to get some input from elsewhere. So feel free to comment if you have any great ideas!